Main Result

Although BERT-style encoder models are heavily used in NLP research, many researchers do not pretrain their own BERTs from scratch due to the high cost of training. In the past half-decade since BERT first rose to prominence, many advances have been made with other transformer architectures and training configurations that have yet to be systematically incorporated into BERT.

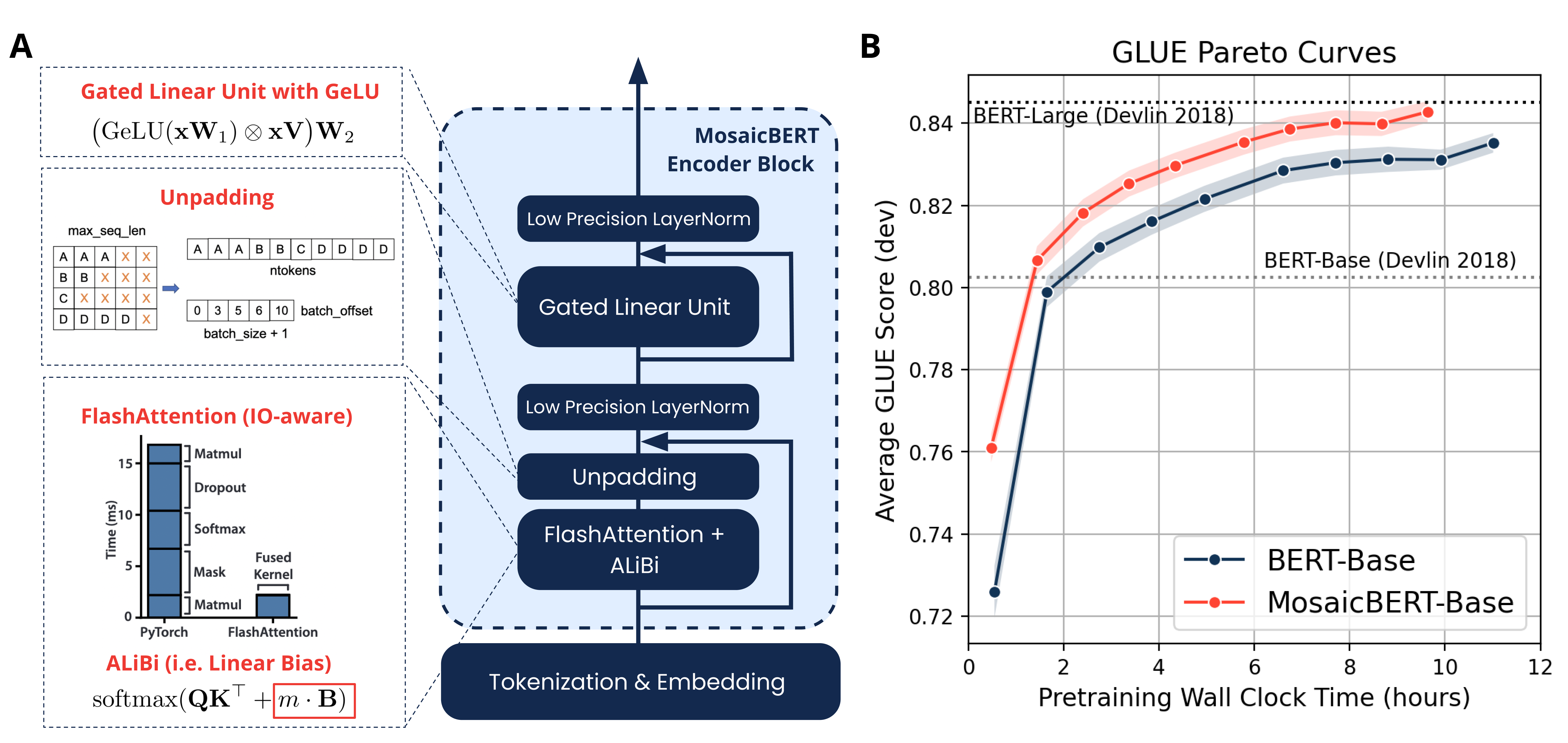

Here, we introduce MosaicBERT, a BERT-style encoder architecture and training recipe that is empirically optimized for fast pretraining. This efficient architecture incorporates FlashAttention, Attention with Linear Biases (ALiBi), Gated Linear Units (GLU), a module to dynamically remove padded tokens, and low-precision LayerNorm into the classic transformer encoder block. The training recipe includes a 30% masking ratio for the Masked Language Modeling (MLM) objective, bfloat16 precision, and a vocabulary size optimized for GPU throughput, in addition to best practices from RoBERTa and other encoder models.
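To make two of these components concrete, the following is a minimal pure-Python sketch, not the released implementation. It assumes a symmetric ALiBi bias of the form `-slope * |i - j|` for a bidirectional encoder, the power-of-two slope schedule from the ALiBi paper, and the standard BERT 80/10/10 replacement rule for selected tokens; the source states only the 30% masking ratio. The function names, the `-100` ignore label, and the token IDs in the usage note are illustrative assumptions.

```python
import random


def alibi_slopes(num_heads):
    """Per-head slopes 2^(-8/n), 2^(-16/n), ..., 2^(-8) for n heads."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only handles power-of-two head counts")
    return [2.0 ** (-8.0 * (h + 1) / num_heads) for h in range(num_heads)]


def alibi_bias(num_heads, seq_len):
    """bias[h][i][j] = -slope_h * |i - j|, added to attention scores before softmax.

    Assumption: the bias is symmetric in (i, j) because the encoder is bidirectional.
    """
    return [
        [[-slope * abs(i - j) for j in range(seq_len)] for i in range(seq_len)]
        for slope in alibi_slopes(num_heads)
    ]


def mask_for_mlm(token_ids, mask_id, vocab_size, mask_ratio=0.30, seed=0):
    """Return (inputs, labels) for masked language modeling.

    Each position is selected independently with probability mask_ratio.
    labels hold the original token at selected positions and -100 elsewhere.
    Assumption: selected tokens follow the 80/10/10 rule
    (mask token / random token / unchanged). Special tokens are not excluded here.
    """
    rng = random.Random(seed)
    inputs = list(token_ids)
    labels = [-100] * len(token_ids)
    for pos, tok in enumerate(token_ids):
        if rng.random() >= mask_ratio:
            continue  # position not selected for prediction
        labels[pos] = tok
        roll = rng.random()
        if roll < 0.8:
            inputs[pos] = mask_id  # replace with the mask token
        elif roll < 0.9:
            inputs[pos] = rng.randrange(vocab_size)  # replace with a random token
        # otherwise keep the original token in place
    return inputs, labels
```

For example, `alibi_bias(num_heads=2, seq_len=3)` penalizes attention between positions in proportion to their distance, and `mask_for_mlm(ids, mask_id=103, vocab_size=30522)` selects roughly 30% of positions on a long sequence (the ID 103 and vocabulary size 30522 are placeholders taken from the original BERT tokenizer, not values stated in this text).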

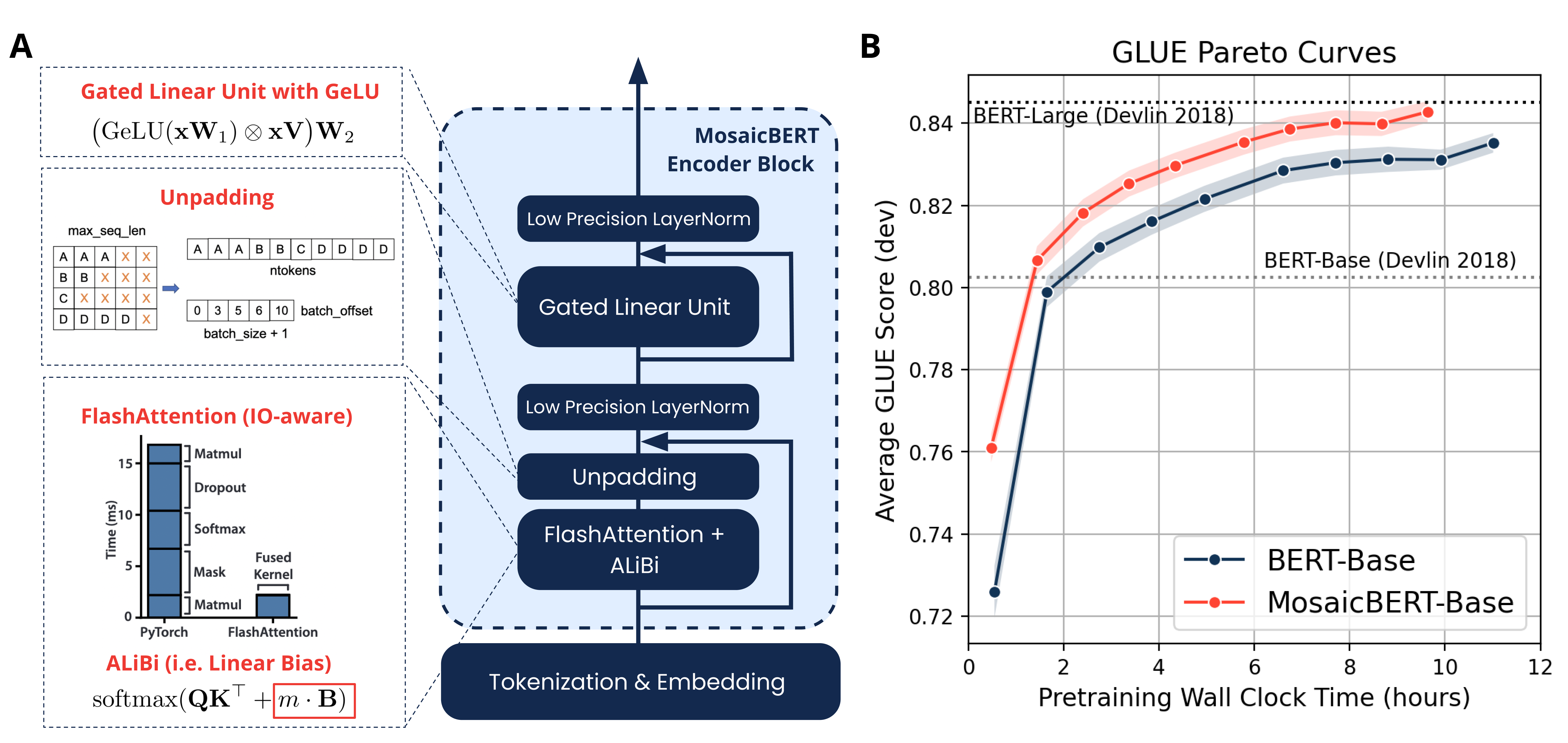

When pretrained from scratch on the C4 dataset, this base model achieves a downstream average GLUE score of 79.6 in 1.13 hours on 8 A100 80 GB GPUs at a cost of roughly $20. We plot extensive accuracy vs. pretraining speed Pareto curves and show that MosaicBERT base and large are consistently Pareto optimal when compared to a competitive BERT base and large. This empirical speedup in pretraining enables researchers and engineers to pretrain custom BERT-style models at low cost instead of finetuning existing generic models. We open-source our model weights and code.
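The cost figure can be unpacked with a short calculation. The sketch below uses only the numbers quoted above (1.13 hours, 8 GPUs, roughly $20); the per-GPU hourly rate it derives is an implied value, not a price stated in the source, and the helper names are illustrative.

```python
def gpu_hours(wall_clock_hours, num_gpus):
    """Total accelerator time consumed by a run."""
    return wall_clock_hours * num_gpus


def implied_hourly_rate(total_cost_usd, wall_clock_hours, num_gpus):
    """Per-GPU hourly price implied by a total cost (a derived figure, not a quoted one)."""
    return total_cost_usd / gpu_hours(wall_clock_hours, num_gpus)


# Figures quoted in the text: 1.13 hours on 8 A100 GPUs for roughly $20.
total = gpu_hours(1.13, 8)                # about 9.04 GPU-hours
rate = implied_hourly_rate(20.0, 1.13, 8)  # about $2.21 per GPU-hour
print(f"{total:.2f} GPU-hours, implied rate ${rate:.2f} per GPU-hour")
```

Under these figures the run consumes about 9 GPU-hours, so the roughly $20 total corresponds to a little over $2 per A100-hour; actual cloud prices vary by provider and over time.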

@article{portes2023MosaicBERT,
  title={MosaicBERT: A Bidirectional Encoder Optimized for Fast Pretraining},
  author={Jacob Portes and Alexander R Trott and Sam Havens and Daniel King and Abhinav Venigalla and Moin Nadeem and Nikhil Sardana and Daya Khudia and Jonathan Frankle},
  journal={NeurIPS},
  url={https://openreview.net/pdf?id=5zipcfLC2Z},
  year={2023},
}